
What is an API rate limit?

An API rate limit is a restriction set by an API provider that dictates the maximum number of requests a client application or user can make to the API within a specific timeframe. This mechanism acts as a crucial tool for managing API traffic, safeguarding against abuse, and ensuring the stability and consistent performance of the API infrastructure for all consumers. These limits are typically defined by the number of allowable requests within a given period, such as per minute, per hour, or per day.

Why are API rate limits necessary?

API rate limits are implemented for a variety of important reasons, benefiting both the API provider and the wider ecosystem of API consumers:

– Preventing Denial-of-Service (DoS) attacks: Rate limits act as a safeguard against malicious actors and poorly written client applications that might flood the API with excessive or unnecessary requests. This helps mitigate denial-of-service attacks, in which the API is overwhelmed and becomes unavailable to legitimate users.

– Ensuring fair usage: By setting limits, API providers can ensure that all consumers have a fair opportunity to access the API resources. Without rate limits, a small number of heavy users could monopolise the API, degrading performance for everyone else.

– Maintaining server stability: Processing a large volume of requests can strain server resources (bandwidth, CPU, memory). Rate limits help to regulate this load, preventing server overload and ensuring the API remains stable for all users.

– Cost management: API providers incur costs for serving requests (e.g., cloud hosting, data transfer). Rate limits help to manage these costs by controlling the overall usage of the API.

– Quality of service (QoS): By preventing abuse and ensuring fair usage, rate limits help provide a consistent and reliable quality of service for all legitimate API consumers.

– Resource protection: Rate limits can help protect specific API endpoints or resources that might be more resource-intensive to serve.

– Scalability planning: Monitoring API usage patterns within the defined rate limits provides valuable data for capacity planning and scaling the API infrastructure as needed.

– Monetisation strategies: For some APIs, offering tiers of access with different rate limits is part of the monetisation strategy: users who need more requests pay for or subscribe to a higher plan.


How API rate limits are implemented

API providers employ various techniques to implement and enforce rate limits. Here are some common approaches:

– Token-based limits: Each client is assigned a unique API key or token. The server tracks the number of requests made by each token within a specific time window. Once the limit is reached for a token, subsequent requests are rejected until the time window resets.

– IP address-based limits: Rate limits can be applied based on the client's IP address. This is simpler to implement but less granular, since multiple users on the same network (for example, devices connected to the same hotspot) may share a single public IP address.

– User account-based limits: For APIs that require user authentication, rate limits can be tied to individual user accounts. This allows finer control based on user tiers or usage patterns, and per-account tracking also lets providers show each user a dashboard of their usage statistics.

– Combination of factors: Providers often use a combination of these methods. For instance, they might apply a general IP-based limit and a more specific token-based limit for authenticated users.

– Different limits for different endpoints: Some APIs apply different rate limits to different endpoints depending on how resource-intensive or critical they are. For example, an endpoint that returns large JSON payloads or media might have a lower rate limit than a lightweight endpoint that returns a small response.

– Time windows: Rate limits are always associated with a specific time window (e.g., per second, per minute, per hour, per day). Once the time window expires, the request counter typically resets.

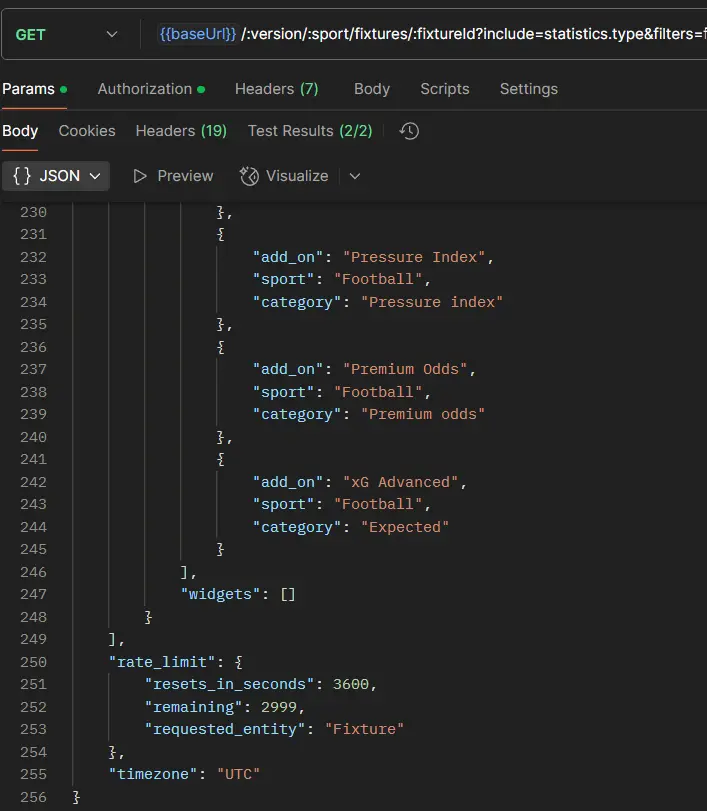

– Headers in responses: Many API servers include HTTP headers in their responses to tell clients their current rate limit status. Providers can also report this in the response body: Sportmonks, for instance, includes a rate_limit object in its API responses that tells you how many requests remain in the current window and when that window resets.
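To make the counting concrete, here is a minimal sketch of a fixed-window limiter of the kind described above, written in JavaScript to match this article's other example. It is an illustration only, not how Sportmonks or any particular provider implements its limits: the FixedWindowLimiter class and its options are hypothetical, and the key can be an API token, an IP address, or a token combined with an endpoint name for per-endpoint limits.

```javascript
// Hypothetical fixed-window rate limiter (illustration, not a provider's implementation).
// The key identifies the client: an API token, an IP address, or "token:endpoint"
// for per-endpoint limits. In this variant a key's window starts at its first request.
class FixedWindowLimiter {
  constructor({ limit, windowMs, now = Date.now }) {
    this.limit = limit; // maximum requests allowed per window
    this.windowMs = windowMs; // window length in milliseconds
    this.now = now; // injectable clock, useful for testing
    this.windows = new Map(); // key -> { start, count }
  }

  // Returns whether the request is allowed, plus the data a server might report
  // back to the client (remaining calls and seconds until the window resets).
  check(key) {
    const t = this.now();
    let w = this.windows.get(key);
    if (!w || t - w.start >= this.windowMs) {
      w = { start: t, count: 0 }; // window expired (or first request): reset the counter
      this.windows.set(key, w);
    }
    const allowed = w.count < this.limit;
    if (allowed) w.count++;
    return {
      allowed,
      remaining: this.limit - w.count,
      resetsInSeconds: Math.ceil((w.start + this.windowMs - t) / 1000),
    };
  }
}
```

For example, limiter.check(`${token}:fixtures`) would give each token a separate budget per endpoint. Fixed windows are the simplest variant; because the counter resets abruptly, a client can send up to twice the limit in a short burst around a window boundary, which is why some providers use sliding windows or token-bucket algorithms (unrelated to API tokens) instead.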

Sportmonks API rate limits

The Sportmonks Football API enforces rate limits to ensure fair usage and system stability. By default, all plans include 3,000 API calls per entity per hour. For example, requests to endpoints like fixtures, teams, or players each count toward the respective entity’s limit. If you exceed this limit, you’ll receive a 429 Too Many Requests response, with details in the response’s rate_limit object indicating remaining calls and reset time. If your application requires more API calls, you can upgrade your plan to increase the limit. To do so, visit MySportmonks and select a higher-tier plan (such as the Enterprise Plan for customised limits), or contact our support team for tailored solutions. For more details on rate limits and upgrading, see our rate limiting documentation.
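As a rough planning aid: 3,000 calls per entity per hour works out to one call every 1.2 seconds (3,600 s ÷ 3,000) if spread evenly. The sketch below applies the same arithmetic to whatever budget is left, assuming the remaining and resets_in_seconds fields of the rate_limit object as they are used in this article's Sportmonks example; the paceFromRateLimit helper itself is hypothetical.

```javascript
// Hypothetical helper: how long to wait between calls so the remaining budget
// for an entity lasts until the window resets. Field names are assumed to match
// the rate_limit object (remaining, resets_in_seconds).
function paceFromRateLimit({ remaining, resets_in_seconds }) {
  const windowMs = resets_in_seconds * 1000;
  if (remaining <= 0) {
    return windowMs; // budget used up: wait for the reset
  }
  return Math.ceil(windowMs / remaining); // even spacing, rounded up to stay under the limit
}
```

Recomputing this after each response keeps the pacing in line with the budget the API actually reports.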

Handling API rate limit errors gracefully

When an API client exceeds the defined rate limit, the server will typically respond with an error status code, often 429 Too Many Requests. It’s important for client applications to handle these errors to avoid service disruptions and provide a better user experience. Here are some best practices for handling rate limit errors:

Detect the error

Your application should be designed to recognise the 429 status code in the API response. Where the API provides them, also check the rate limit headers (commonly X-RateLimit-Limit, X-RateLimit-Remaining and X-RateLimit-Reset, although names vary between providers) to understand the current status and when the limit will reset. Sportmonks reports this information in a rate_limit object in the response body, so a Sportmonks request that handles a 429 error might look like this:

```javascript
// Fetch a Sportmonks endpoint and report the remaining rate limit budget.
// Requires a runtime with a global fetch (modern browsers, Node.js 18+).
const fetchWithRateLimitHandling = async (url) => {
  try {
    const response = await fetch(url);

    if (response.status === 429) {
      // Rate limit exceeded: stop here and let the caller decide when to retry.
      console.warn('Rate limit exceeded. Please wait until the limit resets.');
      return null;
    }

    if (!response.ok) {
      throw new Error(`Request failed with status ${response.status}`);
    }

    const data = await response.json();

    // Read the rate_limit object from the response body (top level, with a
    // fallback in case it is nested under meta).
    const rateLimitInfo = data.rate_limit ?? data.meta?.rate_limit;

    if (rateLimitInfo) {
      const remainingRequests = rateLimitInfo.remaining;
      const resetTime = new Date(Date.now() + rateLimitInfo.resets_in_seconds * 1000);
      console.log(`Remaining Requests: ${remainingRequests}`);
      console.log(`Rate Limit Resets At: ${resetTime.toISOString()}`);
    }

    return data;
  } catch (error) {
    console.error('Error fetching data:', error);
    throw error;
  }
};

// Example usage:
fetchWithRateLimitHandling('https://api.sportmonks.com/v3/football/fixtures?api_token=YOUR_API_TOKEN')
  .then((data) => {
    if (data) {
      console.log('Data:', data);
    }
  })
  .catch((err) => {
    console.error('Error:', err);
  });
```

Implement exponential backoff with jitter

Instead of retrying a failed request immediately, use an exponential backoff strategy: wait for an increasing amount of time between retry attempts. For example, the first retry might come after 1 second, the second after 2 seconds, the third after 4 seconds, and so on. To avoid many clients retrying at the same moment and overwhelming the server again, add a small random delay, known as jitter, to each backoff interval.
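A minimal sketch of exponential backoff with jitter, assuming a 1-second base delay, a 30-second cap, up to 500 ms of additive jitter and five retries (all illustrative values, not provider recommendations). backoffDelayMs and fetchWithBackoff are hypothetical helpers; doRequest is any function that returns a Promise of a fetch-style Response.

```javascript
// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at maxMs,
// plus a random jitter of up to jitterMs. All defaults are illustrative assumptions.
function backoffDelayMs(attempt, { baseMs = 1000, maxMs = 30000, jitterMs = 500, random = Math.random } = {}) {
  const exponential = Math.min(maxMs, baseMs * 2 ** attempt);
  return exponential + Math.floor(random() * jitterMs);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical wrapper: repeat the request while the server answers 429,
// waiting longer after each attempt, and give up after maxRetries retries.
async function fetchWithBackoff(doRequest, { maxRetries = 5, ...delayOptions } = {}) {
  for (let attempt = 0; ; attempt++) {
    const response = await doRequest();
    if (response.status !== 429 || attempt >= maxRetries) {
      return response; // success, a non-rate-limit error, or retries exhausted
    }
    await sleep(backoffDelayMs(attempt, delayOptions));
  }
}
```

For example, fetchWithBackoff(() => fetch(url)) retries a plain fetch call until it stops returning 429. This sketch adds jitter on top of the exponential delay to match the description above; another common variant ("full jitter") picks a random delay between zero and the exponential value.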

Respect the Retry-After header

Some API servers include a Retry-After header in the 429 response. This header specifies the number of seconds (or a date/time) the client should wait before making another request. Your application should prioritise and adhere to the value provided in this header.
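The header can carry either a number of seconds or an HTTP date, so a client needs to handle both forms. The hypothetical retryAfterMs helper below is a sketch that converts either form into milliseconds and returns null when the header is absent or cannot be parsed.

```javascript
// Hypothetical helper: convert a Retry-After header value into a wait in milliseconds.
// The value is either a non-negative number of seconds or an HTTP date.
// Returns null when the header is missing or unparseable, so the caller can
// fall back to its own backoff delay.
function retryAfterMs(headerValue, now = Date.now()) {
  if (headerValue == null) return null;
  const value = String(headerValue).trim();
  if (/^\d+$/.test(value)) {
    return Number(value) * 1000; // delay-seconds form, e.g. "120"
  }
  const date = Date.parse(value); // HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
  if (Number.isNaN(date)) return null;
  return Math.max(0, date - now); // a date in the past means "retry now"
}
```

With fetch, you might call retryAfterMs(response.headers.get('Retry-After')) on a 429 response and use your backoff delay only when it returns null.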

Queue requests

If your application needs to make a series of API calls, consider implementing a request queue. When a rate limit error occurs, instead of dropping requests, add them back to the queue to be retried after an appropriate delay.
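A minimal sketch of such a queue, assuming requests are sent one at a time. RequestQueue is a hypothetical class; the 1-second retry delay and the limit of five retries per request are illustrative assumptions, and in practice you might wait for the Retry-After value or a backoff delay instead of a fixed interval.

```javascript
// Hypothetical sequential request queue: a request that receives a 429 is put back
// at the front of the queue and retried after a delay instead of being dropped.
class RequestQueue {
  constructor({ retryDelayMs = 1000, maxRetries = 5 } = {}) {
    this.tasks = [];
    this.retryDelayMs = retryDelayMs;
    this.maxRetries = maxRetries;
    this.running = false;
  }

  // doRequest: a function returning a Promise of a Response-like object with a status field.
  add(doRequest) {
    return new Promise((resolve, reject) => {
      this.tasks.push({ doRequest, resolve, reject, attempts: 0 });
      this._run();
    });
  }

  async _run() {
    if (this.running) return; // a drain loop is already active
    this.running = true;
    while (this.tasks.length > 0) {
      const task = this.tasks.shift();
      try {
        const response = await task.doRequest();
        if (response.status === 429 && task.attempts < this.maxRetries) {
          task.attempts++;
          this.tasks.unshift(task); // requeue at the front rather than drop it
          await new Promise((resolve) => setTimeout(resolve, this.retryDelayMs));
          continue;
        }
        task.resolve(response); // success, another status, or retries exhausted
      } catch (error) {
        task.reject(error);
      }
    }
    this.running = false;
  }
}
```

Putting the rate-limited request back at the front keeps requests in their original order, which matters when later calls depend on earlier ones.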

Optimise API usage

Review your application’s API usage patterns to identify areas where you can reduce the number of requests. This might involve:

– Batching requests: If the API supports it, combine multiple related actions into a single API call.

– Caching data: Store frequently accessed data locally to avoid redundant API calls. Non-sensitive data such as a user's profile image can be stored on the user's device so that it is not refetched until the user next logs in or updates it manually.

– Fetching only necessary data: Use API parameters to request only the data your application needs, rather than retrieving large datasets and filtering them client-side. For example, if you only need one player's data, request that player rather than fetching the data for the entire team.
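A minimal sketch of local caching, assuming data that can safely be a few minutes old. createCachedFetcher is a hypothetical wrapper around any async fetch function; the 10-minute default TTL is an arbitrary example, and invalidate lets you drop an entry early, for instance after the user updates the underlying data.

```javascript
// Hypothetical in-memory cache with a time-to-live (TTL) around any async fetcher.
// The 10-minute default TTL is an illustrative assumption; pick one that matches
// how often the underlying data actually changes.
function createCachedFetcher(fetcher, { ttlMs = 10 * 60 * 1000, now = Date.now } = {}) {
  const cache = new Map(); // key -> { value, expiresAt }

  async function get(key) {
    const entry = cache.get(key);
    if (entry && entry.expiresAt > now()) {
      return entry.value; // fresh entry: no API call needed
    }
    const value = await fetcher(key); // missing or stale: fetch and store
    cache.set(key, { value, expiresAt: now() + ttlMs });
    return value;
  }

  // Drop an entry early, e.g. after the user updates the underlying data.
  function invalidate(key) {
    cache.delete(key);
  }

  return { get, invalidate };
}
```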

Inform the user (If appropriate)

In user-facing applications, provide informative messages when rate limits are encountered. Avoid technical jargon and explain that the application is temporarily unable to perform the action due to high usage and will retry automatically.

Monitor API usage

Implement monitoring in your application to track how often you are hitting rate limits. This can help you identify potential issues and optimise your API integration. A platform like Sportmonks provides a page showing you extensive information about your account’s API requests.
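A minimal sketch of client-side monitoring, assuming you record the status code of every response. RateLimitMonitor is a hypothetical class that keeps counts in memory; in a real application you would typically send these numbers to your logging or metrics system.

```javascript
// Hypothetical usage monitor: counts responses and 429s per endpoint
// so you can see where your application runs into rate limits.
class RateLimitMonitor {
  constructor() {
    this.stats = new Map(); // endpoint -> { total, limited }
  }

  record(endpoint, status) {
    const s = this.stats.get(endpoint) ?? { total: 0, limited: 0 };
    s.total++;
    if (status === 429) s.limited++;
    this.stats.set(endpoint, s);
  }

  // Fraction of requests to this endpoint that were rate limited (0 to 1).
  limitedRatio(endpoint) {
    const s = this.stats.get(endpoint);
    return s && s.total > 0 ? s.limited / s.total : 0;
  }
}
```

An endpoint whose ratio keeps rising is a good candidate for caching, batching or slower polling.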

Contact the API provider

If you consistently encounter rate limits that hinder your application’s functionality, consider contacting the API provider to discuss potential solutions, such as requesting a higher rate limit if your use case justifies it.

Consequences of ignoring API rate limits

Failing to respect and handle API rate limits can lead to several negative consequences for the client application and potentially the API provider:

– Temporary blocking or throttling: The most immediate consequence is that your application’s requests will be rejected by the API server, often with a 429 Too Many Requests error. The server might also temporarily throttle your requests, significantly slowing down response times.

– Service disruption: If your application heavily relies on the API, hitting rate limits frequently can lead to service disruptions and a poor user experience. Features that depend on the API might become unavailable or unreliable.

– IP address or API key blocking: Repeatedly exceeding rate limits, especially in an abusive manner, can result in the API provider temporarily or permanently blocking your application’s IP address or API key. This will completely break your application’s functionality.

– Increased latency: Even before being completely blocked, exceeding rate limits can sometimes lead to increased latency as the API server struggles to handle the excessive load.

– Financial penalties: Some API providers, especially those with tiered or usage-based pricing, charge overage fees or other penalties when you exceed the agreed-upon rate limits.

– Damage to reputation: If your application becomes unreliable because it ignores rate limits, its reputation can suffer and users may become dissatisfied.

– Legal ramifications: In extreme cases of violation of the API’s terms of service, ignoring rate limits could potentially lead to legal repercussions.

Strategies for staying within API rate limits

Proactive planning and efficient API usage are key to staying within the defined rate limits and avoiding the negative consequences of exceeding them. Here are some strategies:

– Understand the API’s rate limits: Before integrating with an API, thoroughly review its documentation to understand the specific rate limits for different endpoints and the time windows they apply to. Pay attention to any tiered limits or different rules for authenticated vs. unauthenticated requests.

– Design efficient API calls: Structure your application to make the most efficient use of each API call. Retrieve only the data you need, and avoid making redundant requests.

– Implement caching: Cache frequently accessed and relatively static data locally within your application. This reduces the need to make repeated API calls for the same information. Also take note of data freshness and implement appropriate cache invalidation strategies.

– Batch requests: If the API supports batch operations, group multiple related actions or data retrieval requests into a single API call. This significantly reduces the overall number of requests your application makes.

– Use webhooks or streaming APIs (If available): If the API provider offers webhook notifications or streaming APIs, use these instead of repeatedly polling for updates. Webhooks let the server push updates to your application in real time, reducing the need for frequent requests.

– Spread out requests: If you need to make a large number of API calls, try to distribute them evenly over time rather than sending them all at once. This can help you stay within per-minute or per-second rate limits.

– Prioritise critical requests: If you anticipate hitting rate limits, prioritise the most critical API calls for your application’s core functionality. Implement strategies to handle less critical requests with more aggressive backoff or by delaying them.

– Authenticate properly: Ensure you are using the correct authentication method (API keys, tokens, etc.) as required by the API. Incorrect or missing credentials can lead to unnecessary retries and potentially contribute to hitting rate limits.
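Spreading requests out and prioritising critical ones can be combined in a small client-side scheduler. The sketch below is hypothetical: PacedScheduler starts at most one request every minIntervalMs and runs lower priority numbers first. For a limit of 3,000 calls per hour, an even spread would be 1,200 ms between calls; minIntervalMs is an assumption you would tune to your own plan and to how many entities you query.

```javascript
// Hypothetical scheduler that spaces out API calls and runs critical ones first.
class PacedScheduler {
  constructor({ minIntervalMs }) {
    this.minIntervalMs = minIntervalMs; // minimum gap between request starts
    this.queue = []; // items: { task, priority, resolve, reject }
    this.lastStart = -Infinity;
    this.running = false;
  }

  // task: a function returning a Promise. Lower priority numbers run first (0 = critical).
  schedule(task, priority = 1) {
    return new Promise((resolve, reject) => {
      this.queue.push({ task, priority, resolve, reject });
      // Array.prototype.sort is stable, so equal priorities keep FIFO order.
      this.queue.sort((a, b) => a.priority - b.priority);
      this._drain();
    });
  }

  async _drain() {
    if (this.running) return; // a drain loop is already active
    this.running = true;
    while (this.queue.length > 0) {
      const wait = this.lastStart + this.minIntervalMs - Date.now();
      if (wait > 0) await new Promise((resolve) => setTimeout(resolve, wait));
      const item = this.queue.shift(); // highest-priority item at this moment
      this.lastStart = Date.now();
      try {
        item.resolve(await item.task());
      } catch (error) {
        item.reject(error);
      }
    }
    this.running = false;
  }
}
```

Because the queue is re-sorted on every insert, a critical request added while others are waiting jumps ahead of them, but never ahead of a request that has already started.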

Kick off with Sportmonks’ football API

Dive into the world of football data with live scores, in-depth statistics, and fixtures from leagues across the globe. Whether you’re building a fantasy league, a betting app, or a fan site, Sportmonks provides the data you need. Start your free trial now and score big with your audience!